'Your AI is Your Rifle': Leak sheds light on ecosystem behind Pentagon's AI adoption

U.S. military was pitched OpenAI's tools months earlier than previously reported and trained staff to use U.S.-sanctioned Chinese facial recognition; Scale AI pitched itself as an "ammo factory" for "AI wars".

Corey Jaskolski started with a hand drawing of the composite image he expected a satellite to capture of a white weather balloon through a quick sequence of probes in each of the red, green, and blue color channels: essentially, partially overlapping red, green, and blue blobs over a dark background. He fed the template into his company’s artificial intelligence tool and uncovered satellite imagery of an infamous Chinese balloon floating over South Carolina shortly before it was shot down by a U.S. Air Force F-22 fighter jet on February 4, 2023. At the time, Secretary of Defense Lloyd Austin declared the balloon to be “attempt[ing] to surveil strategic sites in the continental United States.” But seven months later, then-Chairman of the Joint Chiefs of Staff Mark Milley told CBS News that “there was no intelligence collection by that balloon.”

The apparently overblown allegations of Chinese aerial surveillance nevertheless established Synthetaic, Mr. Jaskolski’s synthetic data company, as a leader in AI-powered overhead surveillance. After announcing Microsoft’s new partnership with the startup during a keynote presentation at a U.S. Air Force conference on the morning of August 29 of last year, Microsoft executive Jason Zander reconstructed Synthetaic’s methodology for tracking the Chinese balloon through a demonstration of the company’s Rapid Automatic Image Categorization (RAIC) platform. Roughly 21 minutes into his talk, Mr. Zander provided the added “bonus” that RAIC “caught the F-22 sortie that was actually headed towards [the balloon] to take it down.” With a satellite image of the alleged F-22 onscreen with noticeable color-band separation, Zander argued that “We know this because the vector, the timing, and the transponder [of the F-22] was not actually emitting.”1

Zander’s keynote further demonstrated an improvement upon the Chinese balloon monitoring tradecraft which replaced Jaskolski’s hand-drawn image with the text-to-image generation capabilities of the San Francisco-based artificial intelligence company OpenAI’s DALL-E 2 product. After selecting the third image generated by DALL-E for “A top down aerial image of a chalk drawing of the United States map [where] Each state is in a different color,” Zander showed how RAIC could use the synthetic image as a template to uncover actual chalk maps of the U.S. which were captured within high-resolution aerial imagery of Milwaukee, Wisconsin. (Microsoft has reportedly invested $13 billion into OpenAI and wields influence somewhere on the spectrum between exclusive partner and de facto parent company.)

After selecting one of hundreds of results matching the DALL-E rendering of a chalk map, Zander told the Air Force conference: “If I zoom in further [sic] enough, I give you Lancaster Elementary School in Milwaukee in the playground in the back, right next to the hopscotch court, is an actual drawing of the United States on the playground. Which, nice, huh? … There must have been something about Milwaukee, because there are a lot of schools with these in the playgrounds.”2

Synthetaic began a short contract with the U.S. Air Force on “KJJADIC - RAIC IN support of JADO [Joint All-Domain Operations]” the same day as Mr. Zander’s demonstration of the company’s capabilities to the U.S. Air Force.

When reached for comment regarding Mr. Zander’s demonstration of how DALL-E and RAIC could be used to monitor overhead imagery of elementary school playgrounds at The Department of the Air Force Information Technology and Cyberpower Education & Training Event (DAFITC), Microsoft stated that “the school case [is] not a specific example, but an example provided on what is possible that was delivered at the DAFITC.”

OpenAI stated that its company has “clear policies against compromising the privacy of others and would not be comfortable with [its] technology being used ‘to monitor elementary schools with surveillance technologies in a military context.’”

Thanks to a previously unreported public disclosure of presentations from Microsoft, Palantir, and Scale AI at Los Angeles Air Force Base through a nonprofit contractor with the Pentagon’s Chief Digital & Artificial Intelligence Office, it is now clear that Microsoft was pitching violations of OpenAI’s nominal public ban on “military and warfare” uses of its product roughly three months before OpenAI quietly dropped the clause. In a presentation entitled “Generative AI with DoD Data” on October 17, 2023, Microsoft technology specialist Nehemiah Kuhns’s ninth slide detailed numerous U.S. Department of Defense and federal “enforcement” use cases for the OpenAI wrapper sold by Microsoft’s Azure cloud computing division, Microsoft Azure OpenAI, which is often shortened to AOAI.

Beyond noting that “AOAI can be used to analyze images and video for surveillance and security purposes”, Kuhns’s slide also stated that the U.S. military can “[Use] the DALL-E models to create images to train battle management systems.” Without explicitly naming OpenAI as a provider, one year ago Breaking Defense reported that “AI-assisted ‘battle management’ is a central goal of the Pentagon’s sprawling Joint All Domain Command and Control (JADC2) effort.” The publication further noted that inputs to the Pentagon’s generative AI could include the “kill web” of U.S. weapons and sensors, and that output recommendations could include firing missiles. As further evidence that OpenAI’s advertised usage for “battle management” referred to JADC2, the U.S. Air Force component of the joint effort is known as the Advanced Battle Management System.

When reached for comment, Microsoft stated: “For decades, Microsoft has partnered with the federal government and the Armed Forces to achieve their digital transformation goals,” adding that “Artificial intelligence is no exception” and that the slide in question “is an example of potential use cases that was informed by conversations with customers on the art of the possible with generative AI.”

By contrast, OpenAI stated that: “OpenAI’s policies prohibit the use of our tools to develop or use weapons, injure others or destroy property. We were not involved in this presentation and have not had conversations with U.S. defense agencies regarding the hypothetical use cases it describes.” And in response to the Microsoft pitch to the U.S. Space Force that Azure OpenAI could be “used to analyze images and videos for surveillance purposes,” OpenAI stated that “We have no evidence that our models have been used in this capacity.”

The conflicting narratives between Microsoft and OpenAI on whether OpenAI’s tools are being used by the U.S. military have persisted despite Microsoft having announced its intention to sell OpenAI’s GPT-4 model to unnamed U.S. Government agencies in June of last year. Bloomberg’s coverage of the announcement confirmed that the U.S. military’s Defense Technical Information Center would experiment with OpenAI’s products as part of the new offering, and OpenAI later promoted its defensive work on military cybersecurity with the Defense Advanced Research Projects Agency.

The arms-length relationship between OpenAI and Microsoft’s Azure OpenAI provides OpenAI with not just cashflow and brand recognition, but also plausible deniability for controversial uses. Microsoft is publicly committed to selling cloud computing and artificial intelligence to the Pentagon through the Joint Warfighting Cloud Capability (JWCC) contract and to the U.S. Intelligence Community through Commercial Cloud Enterprise (C2E), while OpenAI appears to see such work as antithetical to its brand.

Mr. Kuhns spoke on the podcast “Data Talk” the month before his Space Force presentation about both Mr. Zander’s demonstration of Synthetaic at DAFITC and U.S. Government customers being allowed to opt out of Microsoft’s “Responsible AI” constraints on OpenAI. “There are guardrails in place within Azure Commercial with Azure OpenAI that ensure that the AI models are not being used in a malicious or self-harming way,” Kuhns stated, before adding that “because of the nature of a lot of federal customers’ use cases, Microsoft has agreed to enable an opt-out capability for that.”

In response to a question on whether Microsoft’s opt-out program for “Responsible AI” provides a circumvention of OpenAI’s ban on “military and warfare” use cases, OpenAI stated that its company “[does] not offer opt-outs to our usage policies,” adding that “You would need to ask Microsoft about their policies.” OpenAI further declined to answer roughly when U.S. military and intelligence agencies first began using Azure OpenAI, stating “You would need to talk to Microsoft about Azure OpenAI.”

Microsoft did not respond to a request for comment specifically on whether governments such as Saudi Arabia or the United Arab Emirates would, like the United States military, be allowed to opt out of Azure’s “Responsible AI” constraints. Microsoft similarly refused to comment on whether Azure OpenAI’s relationships with the U.S. military violated OpenAI’s ban on “military and warfare” uses of its products.

‘AI Literacy’ within U.S. Special Operations Command

Last month, from Tuesday, March 5 through Thursday, March 7, Florida defense contractor NexTech Solutions hosted an “AI Literacy” training for participants from U.S. Special Operations Command (USSOCOM) at its Pinewood Mission Support Center in Tampa, Florida. The center is less than a five-minute walk north of the Dale Mabry entrance to MacDill Air Force Base. Opening remarks were given by Col. Rhea Pritchett, the Program Executive Officer of Special Operations Forces Digital Applications (PEO SDA) within SOCOM’s Special Operations Forces Acquisitions, Technology & Logistics (SOF AT&L) program.

While previously unreported, the majority of the details of this event and eight others like it were leaked over the last nine months by Alethia Labs, the Virginia-based contractor running the “AI Literacy” program on behalf of the Tradewinds software acquisition effort of the Pentagon’s Chief Digital & Artificial Intelligence Office (CDAO). Alethia published corporate slide decks, agendas, training materials, and event photos publicly on its website, where they even ended up in Google’s search index. In addition to multiple participants from the SOCOM event visibly wearing NexTech Solutions visitor badges in published photos, the WiFi network for the event could be seen to be “NTS-Guest”, and the associated password clearly included the street on the southern side of the building, Pinewood.3

As previously reported by the author based upon U.S. Government contracting documents, NexTech Solutions has resold the facial recognition software produced by the controversial American company Clearview AI to both U.S. Special Operations Forces and the Office of the Inspector General of the National Science Foundation. Many of the Alethia Labs ‘AI Literacy’ events hosted for the CDAO also published identical spreadsheets of artificial intelligence tool recommendations, including that of Clearview AI.

NexTech Solutions did not respond to a request for comment.

On the morning of the second day of the SOCOM workshop, each of the 12 participating teams was asked “to imagine a scenario in which an AI project which you initiated has gone very wrong, and has now made national headlines.” The two most common categories of constructed disasters related to data breaches and mistakes made by artificial intelligence weapons systems, with summaries including Team 10’s “38TB [terabyte] Data accidentally exposed by Microsoft AI Researchers” and Team 5’s “The USAF [U.S. Air Force] kinetically struck a civilian hospital … [and] responded by blaming its nascent AI/ML capabilities,” resulting in the “General Officer that authorized the strike [being] removed from his position on the Joint Staff.”

Another hypothetical example, from Team 9, considered the statement of work from a cyber contract accidentally making use of a third-party Chinese AI program which “was funneling DoD information back to China authorities,” resulting in the military officer being sent to prison and the relationship with the vendor being terminated. Despite this thought exercise, numerous Alethia Labs “AI Literacy” training events hosted on behalf of the CDAO published spreadsheets of recommended artificial intelligence programs falsely listing the Face++ facial recognition program produced by the U.S.-sanctioned, Beijing-based company Megvii as approved for U.S. government / military usage.

But the most pointed usage of Face++ took place between July 31 and August 3 of last year during an “AI Literacy” event for U.S. Army Special Operations Command (USASOC): SOCOM’s component of the U.S. Army and the home of Green Berets, Rangers, Delta Force, and the elite intelligence-gathering unit Task Force Orange. Within a published PDF of the detailed activities of the four-day workshop, a section entitled “DoD every-day work - Practical AI tools & exercises” contained detailed steps participants were to follow as part of experimenting with Face++.4

Alethia Labs did not respond to a request for comment, instead choosing to silently respond by removing the published materials from its website, but a spokesperson for the U.S. Department of Defense provided a detailed statement confirming the leak:

“The documents found on the internet were primarily used in one of our training courses focused on educating the DoD acquisition workforce on data and AI capabilities, and best practices on how to acquire these capabilities in DoD. As part of the course, participants are exposed to industry technologies through briefs and hands-on interaction with technologies. To give course participants access to materials after the course, participants were provided with links to the agenda and course material. The approach used to store these documents made the materials accessible via internet search engines. The CDAO has been working with the course provider to update their storage approach and ensure course content is securely shared going forward.”

The published U.S. Army Special Operations Command document containing a Face++ walkthrough also included white papers created by asking Large Language Models to auto-complete a given table of contents. The first such white paper, focused on ‘Training and Simulation’, led a subsection on “Case studies and examples highlighting successful AI implementations” by apparently hallucinating the U.S. military’s “Project MAVEN” to have corresponded to the acronym “Massive Autonomous Visual Analysis Network” — which obviously yields MAVAN rather than MAVEN.

A separate LLM-generated whitepaper, titled “Overview of AI Integration in Military and Intelligence Operations”, filled in a subsection on “Case Studies Demonstrating AI's Impact on Enemy Intelligence Gathering” by falsely claiming that Operation Glowing Symphony was led by the Israel Defense Forces and focused on “the use of AI algorithms to analyze intercepted communications in real-time.” In fact, Glowing Symphony focused on disrupting the media networks of the Islamic State and was led by U.S. Cyber Command’s Joint Task Force Ares.5

AI-generated ‘Digital Footprint Assessments’

Team 3 from last month’s SOCOM AI literacy exercise in NTS’s Pinewood office chose another prescient disaster headline: “Security breach with Collaboration AI Impacts USSOCOM.” While the ‘security breach’ would in reality be Alethia Labs having already publicly posted the ‘Digital Footprint Assessments’ of 47 participants of its similar June 2023 event in Dayton, Ohio, the SOCOM attendees were apparently not yet aware. The highest profile assessment included in the leak was of Bonnie Evangelista, who provided the opening remarks of the third day of the SOCOM workshop due to her role as head of the CDAO’s Tradewinds technology acquisition program and as acting Deputy Chief Digital & AI Officer for Acquisitions. (Ms. Evangelista’s previous role was as a Senior Procurement Analyst for the Joint Artificial Intelligence Center, which ran Project Maven before being absorbed into the CDAO.)

Ms. Evangelista’s 19-page ‘Digital Footprint Assessment’ begins with a notice that it is “designed to help you understand what a Google search and your social profiles reveal about you.” As promised, its second page contains a compact representation of Evangelista’s LinkedIn, Twitter, Facebook, and Google Search profiles, along with a “Digital Rating” of five out of 15 and an “AI Personality” categorization as conscientious and dominant.6 Other possible personality categories for the assessment include being labeled as an influencer or as calm / steady.

Perhaps the most entertaining component of each Collaboration.AI “digital footprint” report comes from asking two separate Large Language Models — OpenAI’s ChatGPT and Google’s Gemini — to generate short autobiographies from the perspective of the client / target after feeding in their public social media profiles. After a cursory paragraph essentially summarizing Ms. Evangelista’s LinkedIn profile, ChatGPT compared the former procurement analyst for the Pentagon’s Project Maven to Meredith Whittaker, the current president of Signal who previously helped lead Google’s opposition to Maven: “In many ways, my work mirrors that of Meredith Whittaker, an AI researcher who has been a driving force in challenging Big Tech and advocating for greater accountability and awareness in the field of AI ethics. Just as she’s pushing for change in the tech industry, I am disrupting the traditional technology acquisition process within the DoD.”

Collaboration.AI did not respond to a request for comment via voicemail on their public phone number.

Just as the facial recognition platform PimEyes nominally demands that users only upload photos of themselves, yet is quasi-openly used by reputable investigative organizations to surveil targets, the restriction of Collaboration.AI’s footprint analysis techniques to voluntary targets is simply a matter of policy. Another company or government agency could easily apply the same methodology across the social media profiles of every influential figure in China as part of the so-called “target development” process of an information operation. Likewise, the voluntary profiles generated by Collaboration.AI could be viewed as baselines for what an under-resourced foreign intelligence service could conclude about members of the U.S. military.7

AI intelligence officers and “ammo factories” for “AI wars”

National security reporters generally draw on a wide variety of information sources, including confidential sources developed within companies, recent press releases and LinkedIn posts from the companies in question, and leaked documents. In the parlance of intelligence analysis, one might respectively categorize the three information sources as Human Intelligence (HUMINT), Open Source Intelligence (OSINT), and, in perhaps the weakest of the three analogies, Signals Intelligence (SIGINT). Fusing all three categories within a single investigation could be considered a rudimentary example of what the U.S. intelligence community refers to as ‘all-source intelligence analysis’.

Given the preponderance of threats to replace reporting with Large Language Models, it may not come as a surprise that national security contractors have pitched their own AI tools for all-source analysis. The basic idea is to feed the appropriate data from HUMINT, OSINT, SIGINT, etc. into a Large Language Model and then to ask it questions of interest — forming a natural extension of the targeted OSINT context of Collaboration.AI’s digital footprint assessments, where the only question asked of the LLM was to generate a short self-description of the subject of the OSINT. A targeting officer within a U.S. intelligence agency could hypothetically query the LLM with more useful questions when analyzing Russian generals or executives, such as which interactions would be most useful for gaining their trust. One of the most useful pieces of context for such an analysis would be the so-called “pattern of life” of the target — essentially where they go and when — and so data sources representing such behavior would be powerful inputs into an LLM-based targeting system.

Two separate slide decks published by Alethia Labs from the natural language-focused defense contractor Primer Technologies provided explicit diagrams for how their Delta Platform could be used to automatically generate targeting reports after ingesting HUMINT, OSINT, and SIGINT. With a federal board of advisors that has included the former commander of SOCOM, Tony “T2” Thomas, as well as the former head of the Central Intelligence Agency’s Directorate of Science & Technology, Dawn Meyerriecks, plus an investment from the U.S. Intelligence Community’s In-Q-Tel, Primer’s advertised usage for targeting intelligence should not come as a complete surprise.8

As reported by The American Prospect in the early months of the Biden administration, current National Security Council coordinator for the Middle East Brett McGurk earned $100,000 from Primer after spending less than a year on the company’s board of directors. The Government of Abu Dhabi’s Mubadala Investment Company also invested through Primer’s $40 million ‘Series B’ fundraising round in late 2018.

Primer did not respond to a request for comment.

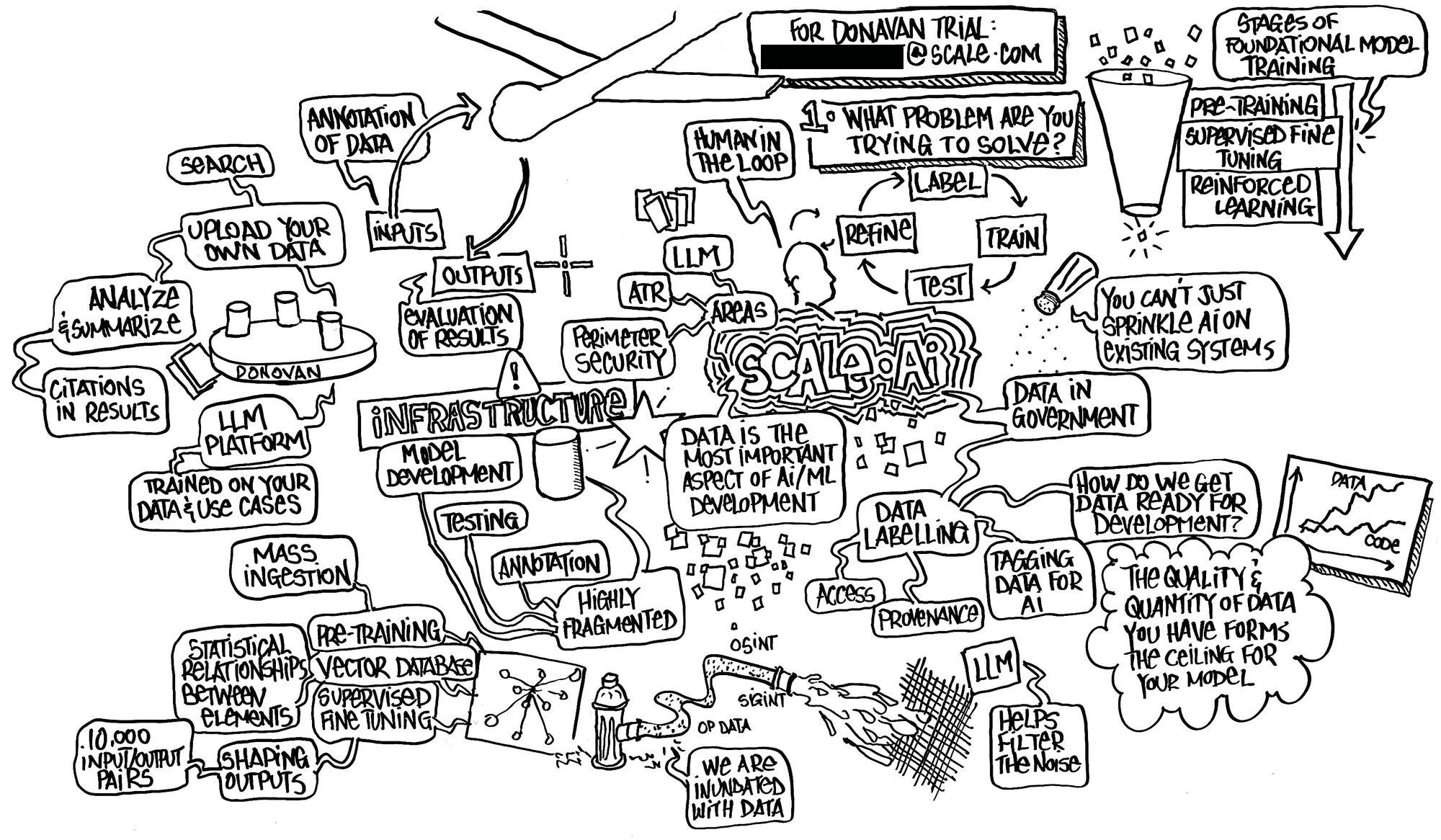

But Primer’s much larger competitor in the ‘AI intelligence officer’ space is the Donovan product produced by Scale AI. Run by 27-year-old CEO Alexandr Wang, the data labeling and artificial intelligence company was recently reported to be nearing a $13 billion valuation. Despite the company’s tremendous value, its governance boards remain surprisingly opaque. But a December announcement of Scale’s partnership with the chatroom-monitoring company Flashpoint named former CIA Chief Operating Officer Andrew Makridis as an advisor to both companies. (Flashpoint and Primer have long publicly listed each other as partners.)

Beyond his role as a co-host of the re-booted Intelligence Matters podcast run by the national security consulting firm Beacon Global, Makridis has publicly claimed credit for leading the CIA’s response to the WikiLeaks “Vault 7” disclosures of the agency’s offensive cyber capabilities, which reportedly led to the CIA contemplating an assassination of the publication’s editor-in-chief, Julian Assange.9 And as can be easily verified from public records, Scale AI spokesperson Heather F. Horniak previously represented the CIA regarding both Vault 7 and former director Gina Haspel’s alleged role in the destruction of video evidence of the agency’s torture of detainees.

Scale’s presentation to members of SOCOM as part of the CDAO “AI Literacy” training last month not only laid out a list of U.S. government agencies the AI company has worked with — including both SOCOM and the CDAO — it also emphasized Scale’s previously reported data labeling support for both the Army Research Laboratory’s Automated Target Recognition (ATR) program and Army Ground Combat Systems’ autonomous tank effort, known as the Robotic Combat Vehicle (RCV).

A slide on Scale’s Public Sector AI Center in St. Louis, Missouri asserted that the office has 300 data labeling specialists which, on a weekly basis for the U.S. military and intelligence community, produce: 35,000 Electro-Optical (EO) imagery annotations, 20,000 for Synthetic Aperture Radar (SAR), and “thousands” for Full Motion Video (FMV).

More than one of Scale AI’s leaked presentations further asserted the company’s ability to fuel artificial intelligence-driven “loitering munitions” — which are often colloquially referred to as suicide drones. In a slide whose title asked “Do you have an AI ammo factory?” Scale AI asserted that “curated and labeled datasets” are the “‘ammo’ for ‘AI wars’” and that “Scale can provide a ‘data engine’ for AI and autonomy missions across all programs” within the U.S. Department of Defense. The slide further argued in full Pentagon jargon that “Humans not teamed with AI/ML will not be at speed of relevance on the ‘X’.”

Out of apparent concern for being blocked out of Department of Defense usage due to security concerns, Scale AI dedicated a slide to arguing that its Donovan product is not publicly accessible and thus exempt from outgoing CDAO Craig Martell’s assertion that “Any input into publicly accessible Gen AI tools is analogous to a public release of that information.”10

When reached for comment, Scale AI stated: “Scale’s Data Engine fuels some of the world’s most advanced AI models, including at the Department of Defense (DoD). Scale is proud to work with the DoD to help navigate the adoption of AI responsibly, including by participating in DoD-led AI literacy courses that explore the possibilities and limitations of the technology.”

An AI-powered map of Chinese influence

Before being “struck by lightning” through a critical Rolling Stone exposé which ended his military career and inspired a movie starring Brad Pitt, General Stanley McChrystal spent nearly a year as commander of NATO forces in Afghanistan, five years as commander of the pointiest end of U.S. Special Operations Forces, and, for roughly two years in the late 1990s, commander of the 75th Ranger Regiment. By the end of 2011, the former general had founded the advisory firm McChrystal Group, recruiting his former aide-de-camp from his final year commanding Joint Special Operations Command, Christopher Fussell, as President.

McChrystal’s advisory group also temporarily attracted the legendary former chief of CIA operations Gregory “Spider” Vogle as a Principal, while former XVIII Airborne Corps commander John R. Vines became Chief People Officer.11 Former SOCOM director of operations Clayton Hutmacher — who once commanded JSOC’s aviation unit, the Night Stalkers — would also simultaneously advise both McChrystal Group and Primer.

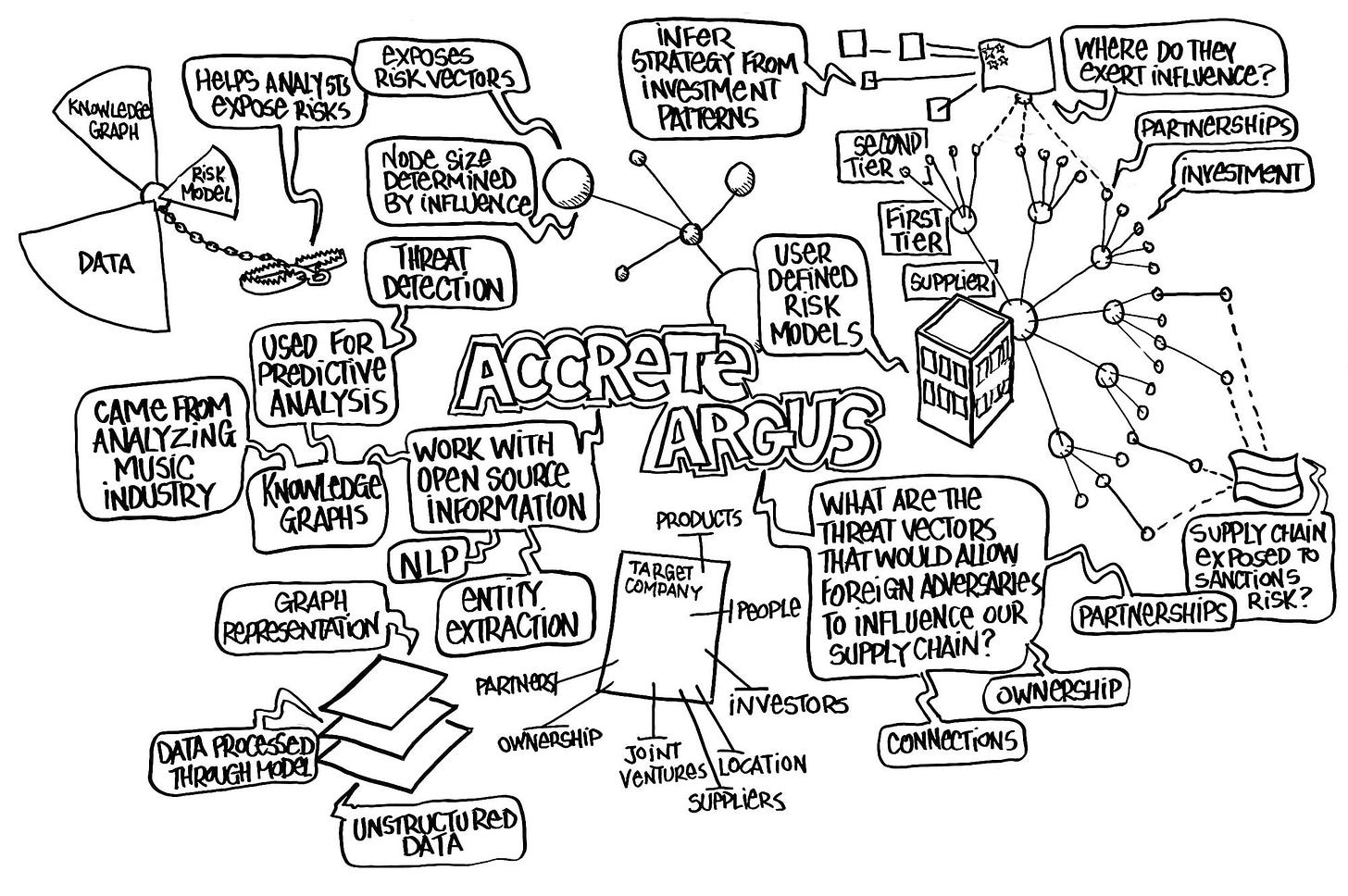

One of the fellow presenters at last month’s CDAO “AI Literacy” event alongside Primer was the AI-infused knowledge graph company Accrete, whose confidential slides were also published by Alethia Labs. Founded in Lower Manhattan in 2017 by a former high-frequency trader, Accrete announced in January of last year that former JSOC commander Stanley McChrystal would serve as an advisor for its Argus platform, which was described as a “threat detection system” scanning open source information. The same press release would note that Accrete’s head of federal sales, Bill Wall, served under McChrystal within JSOC, while Accrete’s website profile of Wall further described him as “the founder and first commander of a unique computer network operations organization in [JSOC].”

That is to say, Accrete’s head of federal sales founded and led a hacking unit within JSOC during the Global War on Terror.

Like essentially all of its competitors, Accrete spent much of the last two years pivoting its Argus platform to leverage the proliferation of Large Language Models. But rather than simply focusing on fusing together arbitrary datasets, its specialty would be the incorporation of an ever-expanding “knowledge graph” — a rough analogue of the citation-based associations between people and organizations mentioned on Wikipedia — into its LLM agents. And in addition to augmenting its conversational AI with knowledge graphs, the company would also contract with U.S. Special Operations Forces and the Defense Intelligence Agency on automatically generating knowledge graphs by scanning news articles, corporate registries, and procurement databases with its LLM. The primary target for the latter capability would be mapping out the influence of the Chinese government on U.S. supply chains — as revealed by the two Argus slide decks published by Alethia Labs.

A close competitor to Argus in this space is Sayari Labs, a de facto spin-out of the Palantir-powered think tank Center for Advanced Defense Studies (C4ADS). Despite Sayari having publicly accepted an investment from the U.S. Intelligence Community’s In-Q-Tel, the firm’s public image centered around vanilla corporate records analysis as part of exposing violations of U.S. sanctions, which has also been a central focus of C4ADS. But, as previously reported by the author based upon access to the ‘Vulcan’ social network SOCOM maintains with its contractors, Sayari privately pitched U.S. Special Operations Forces on weaponizing its corporate knowledge graphs for classified offensive cyber and psychological operations against China.

Accrete did not respond to a request for comment regarding whether it has collaborated on offensive cyber operations with the U.S. Government.

The entire cache of documents leaked by Alethia Labs was discovered by the author through a Google search for Accrete’s relationship with The Wire Digital, a company founded by former New York Times Shanghai bureau chief David M. Barboza to fund high-quality journalism on China — under the brand ‘The Wire China’ — through a corporate records product named WireScreen. It would somewhat follow in the footsteps of billionaire Michael Bloomberg’s media empire, albeit with an initial focus on Barboza’s specialty of Chinese corporate records analysis. (The Wire has also publicly stated an intention to expand its focus to India and Vietnam.)

According to reporting from Times-journalist-turned-venture-capitalist Nicole Perlroth in 2013, Mr. Barboza’s investigations into the billions in wealth being secretly accumulated by family members of Chinese Communist Party officials seemingly led to his email account being breached by Chinese hackers. In its successful nomination of Barboza for a Pulitzer Prize for International Reporting, The Times referred to his reporting on the family members of former Chinese premier Wen Jiabao as “a Chinese version of the Pentagon Papers.” Roughly a decade later, Barboza’s company entered into a three-year contract with the Pentagon’s Defense Innovation Unit that leverages Barboza’s Chinese public records expertise through WireScreen to map out potential influences from Chinese capital on U.S. defense technology investments.

According to the Pentagon’s justification for non-competitively purchasing WireScreen directly from The Wire, so far awarding the company $275,400, the platform “illuminates Chinese Defense contractors, arms dealers, security and surveillance companies, as well as who manages these entities, offering licensed users a deeper understanding of China [sic] government-controlled entities that are not otherwise listed in their public filings.”

Backed by venture capital from Sequoia Capital and Harpoon Ventures, WireScreen is jointly run by Mr. Barboza and his former financier wife, co-founder and Chief Operating Officer Lynn Zhang. Beyond Accrete being the only customer named on WireScreen’s homepage, Accrete’s homepage asserts that the discussion in the Defense Innovation Unit’s 2021 annual report on the usage of AI knowledge graphs for discovering the “illicit operations” of Chinese technology investments is an implicit reference to its Argus product. Accrete’s leaked presentation to SOCOM last month also disclosed WireScreen as one of its few proprietary data sources, beyond Rhodium Group’s US-China Foreign Direct Investment (FDI) and Venture Capital datasets and undisclosed information from S&P Global.

While Mr. Barboza and The Wire Digital refused to comment on-the-record about the relationship between WireScreen and Accrete, the partnership at least broadly aligns with the U.S. military’s establishment of China as its “top pacing challenge.” As reported by Reuters last month, the Central Intelligence Agency launched a covert, offensive information operation against China under President Trump in 2019 which has “promoted allegations that members of the ruling Communist Party were hiding ill-gotten money overseas.”

The world of U.S.-based procurement analysts who are fluent in Mandarin is understandably small, and a current senior research analyst at WireScreen, whom the author is not naming because they are not sufficiently senior, was hired directly from the private investigations firm Mintz Group in February of last year. The analyst spent roughly 16 months at Mintz focusing on the analysis of Chinese corporate records databases such as Qichacha under the leadership of a former CIA China station chief, Randal Phillips, who was at the time head of Mintz’s Asia practice and once vice-chaired the industry trade group AmCham China.

Mr. Phillips left Mintz Group to found HFBB Associates circa July 2023 and did not respond to requests for comment through the contact information of his new firm, either by phone or by email.

The highest-profile advisor to The Wire has certainly been Jill Abramson, who was executive editor of The New York Times during the period when Mr. Barboza was awarded two Pulitzers after being allegedly hacked by China. When reached for comment, Ms. Abramson confirmed her advisory role but stated that she had no knowledge of The Wire’s partnership with the Pentagon or Accrete, adding: “My role is purely advisory but I've been involved with The Wire from its inception. David Barboza and Lynn have been highly valued colleagues of mine since we met in 2012, when David and I worked closely together at The New York Times on a sensitive story involving corruption in the Chinese leadership, work which earned a very deserved Pulitzer Prize. I know both of them adhere to the highest professional and journalistic standards, as does The Wire.”

The author thanks Sam Biddle for pointing out Nehemiah Kuhns’s September 2023 interview with the Data Talk podcast.

Zander was also listed as Chief Technology Advisor to TitletownTech, a venture capital partnership between Microsoft and The Green Bay Packers which invested in Synthetaic’s $15 million ‘Series B’ fundraising round in February.

Zander’s roughly 45-minute talk also demonstrated a proof-of-concept implementation of a “Planning CoPilot” for U.S. Indo-Pacific Command based upon OpenAI’s ChatGPT text generation product. Resembling a less-polished version of competing products from Palantir and Scale AI (AIP and Donovan, respectively), the CoPilot publicly established ongoing efforts to incorporate OpenAI’s products into the U.S. military’s competition with China. Zander also briefly discussed U.S. military satellites surveilling not just the optical spectrum but also radio-frequency emissions, in a seeming allusion to the capabilities of Virginia-based firm HawkEye 360.

NTS has surprisingly deep ties to SOCOM technology acquisition, and this is the fourth investigation the author has published in which they are involved. In addition to reselling facial recognition software produced by the controversial American company Clearview AI to both SOCOM and the Office of the Inspector General of the National Science Foundation, NTS hosted the sixth “hunt” of the counter-sex work nonprofit Skull Games, which was founded by former Delta Force operator Jeff Tiegs. In October, NTS also hired former 66th Military Intelligence Brigade commander Devon Blake as a Senior Growth Officer from her previous role as a Senior Director of semi-covert SOCOM contractor Premise Data.

Despite Chinese text being clearly visible in the company logo of Megvii and throughout the linked Face++ websites, the USASOC instructions do not mention that Face++ is developed by a U.S.-sanctioned company, and, again, the spreadsheet of AI tools distributed to participants claimed the product is approved for U.S. Government usage.

For an actual explanation of Project Maven, the Pentagon’s pathfinder project on operationalizing artificial intelligence for applications such as drone and satellite surveillance, and of Operation Glowing Symphony, see Maven co-founder Colin Carroll’s interview with the Scuttlebutt Podcast and NPR’s article on ‘How the U.S. Hacked ISIS’. Carroll transitioned from counter-ISIS work into counter-intel for Maven circa 2017. Maven was originally housed within the Pentagon’s Joint Artificial Intelligence Center, which was absorbed into the newly created CDAO in 2022, albeit with Maven being divested to the National Geospatial-Intelligence Agency beginning in 2023.

The same page also estimated Ms. Evangelista’s ‘LinkedIn Search Engine Optimization’ value to be $87,696, based upon a dubious formula involving the sum of the cost-per-click of ads relating to her top five profile keywords — including “Management” — multiplied by 20% of her average audience size and then by her average number of posts per month.

Augmenting the LLM input data with any relevant SF-86 questionnaires extracted from the Office of Personnel Management through China’s alleged 2015 hack would likely provide a more accurate baseline for Chinese intelligence profiles, and likewise for datasets suspected to be breached by other intelligence services. The primary complication for U.S. Government agencies performing such analysis almost certainly relates to securely introducing classified datasets into commercial LLMs, though Scale AI and Palantir each advertise such capabilities.

Despite Primer listing Meyerriecks as a member of its [advisory] board in its March 2024 presentation to members of SOCOM, she was removed from the company’s public website late last year.

Scale AI CEO Alexandr Wang has also maintained a close association with former Google CEO Eric Schmidt, and his company hired Michael Kratsios as a Managing Director. Kratsios is a former U.S. Chief Technology Officer during the Trump administration who was Chief of Staff at Thiel Capital in the final years of the Obama administration.

Martell was recently replaced as head of CDAO by former Google Trust & Safety executive Radha Iyengar Plumb, who previously worked as chief of staff to the Assistant Secretary of Defense for Special Operations and Low-Intensity Conflict.

Vogle’s successor as the CIA’s Deputy Director of Operations, Elizabeth Kimber, went on to become Vice President for Intelligence Community Strategy of the information warfare contractor Two Six Technologies, which the author has periodically written about.